Fun with Mesosphere and Microservices

Mesosphere (from Mesosphere.com) is a datacenter operating system (DCOS) for managing clusters. In this article I will describe our experience installing and using Mesosphere on Microsoft’s Azure cloud. There are four major components to Mesosphere.

- Apache Mesos: a distributed system kernel.

- Marathon: this is the “init system” for Mesosphere. It starts and monitors applications and services and it automatically heals any failures.

- Mesos-DNS: a service discovery utility.

- ZooKeeper: a high performance coordination service to manage the installed DCOS services.

When Mesosphere is deployed, it has a master node, a backup master, and a set of workers that run the service containers.

Figure 1. Mesosphere Architecture

Mesos has a concept of a service and a separate concept of a task. Marathon is considered a service, and Marathon manages the tasks on the worker nodes. Our microservices will be Marathon tasks. Other Mesos services that can be launched with a dcos command include Chronos, Cassandra, HDFS, Kafka, and Spark. One can even deploy Kubernetes as a Mesos service. For example, the dcos command to launch a Spark service is

$ dcos package install spark

To launch a microservice (a Marathon task) packaged as a Docker container, you create a definition file that describes the deployment you want and issue the command

$ dcos marathon app add definition.json

In the deployment section below we will provide specific details on how this works. Of course you can read much more about mesosphere and marathon at https://docs.mesosphere.com. It is also worth reading the original technical paper http://mesos.berkeley.edu/mesos_tech_report.pdf.

Mesosphere has a lovely web interface that can be used to monitor and manage various services. In addition, as we shall see, the web interface is very handy for scaling microservice tasks.

Figure 2. Mesosphere Web Interface

Installing and Running Mesosphere on Azure

Getting Mesosphere to install on the Azure cloud is extremely easy. The instructions are all at https://docs.mesosphere.com/install/azurecluster/. That will get the Azure side running. The next thing you must do is install the DCOS command line interface; instructions for that are at https://docs.mesosphere.com/install/cli/. You will need the URL for the master node, which can be found on the new Azure portal under the tab for masterPublicIPO. Loading http://your-master-addr into a browser will bring up the Mesosphere portal.

You will notice that the master and the worker nodes of the cluster are all on a VPN. The master has a public IP address, but the workers do not. You can ssh into the master and, if you need to, ssh from the master into a worker with a command like

$ ssh -i mycert.pem core@10.0.0.x

where x is the last number in the IP address of one of the workers and mycert.pem is the certificate you used to create the cluster. You will also see that your cluster is part of a new Azure “resource group” and that the VMs do not appear in the old-style portal. Unfortunately, I did not find a way to open additional ports on the master or the worker nodes. (I expect there is a way to open additional ports on the master, but I have not discovered how to do this!) Consequently, for microservices running on the workers, communication is limited to the VPN.

Building and Deploying a Simple Microservice

To illustrate how you can build and deploy a microservice on the Mesosphere cluster, we will build one that listens for events. One of the standard communication protocols for events is AMQP, so we will use a standard message queueing server, RabbitMQ. The microservice will subscribe to a queue on this AMQP server to receive event notifications. We can use this microservice as a way to process external events generated from instruments, sensors or mobile client applications as illustrated below.

Figure 3. A Microservice that responds to AMQP messages from external sources

The code for the service is in Python and it uses the package Pika for AMQP messaging.

Figure 4. Python code for basic AMQP listener “rabbit service”

The code is a simple template that subscribes to a topic queue “jobs” and waits for messages. If one is received without error, the service can then process the content of the message in the string “body”. If, for some reason, the service loses the connection to the RabbitMQ broker, an exception is thrown and the service waits for 5 seconds and tries again.
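The listing itself did not survive in this copy of the article, so here is a minimal sketch of a listener with the behavior just described. It is not the original code. It assumes the Pika 1.x API, and the broker address in the final comment is a hypothetical placeholder. The `process` function stands in for whatever work the service does with “body”.

```python
import time

QUEUE_NAME = "jobs"        # queue name taken from the text
RETRY_DELAY_SECONDS = 5    # wait time after a lost connection, from the text


def process(body):
    """Placeholder for the real work; here we only decode the message body."""
    return body.decode("utf-8") if isinstance(body, bytes) else str(body)


def consume(host):
    """Connect to the broker and block while handling messages from the queue."""
    import pika  # imported here so the rest of the module loads without Pika

    connection = pika.BlockingConnection(pika.ConnectionParameters(host=host))
    channel = connection.channel()
    channel.queue_declare(queue=QUEUE_NAME)

    def on_message(ch, method, properties, body):
        print("received:", process(body))

    # Pika 1.x signature (assumption); older releases used a different argument order.
    channel.basic_consume(queue=QUEUE_NAME, on_message_callback=on_message, auto_ack=True)
    channel.start_consuming()


def run_with_retry(consume_fn, delay=RETRY_DELAY_SECONDS, max_attempts=None, sleep=time.sleep):
    """Call consume_fn repeatedly; after any exception, wait and try again."""
    attempts = 0
    while max_attempts is None or attempts < max_attempts:
        attempts += 1
        try:
            consume_fn()
        except Exception as exc:
            print("lost connection (%s); retrying in %d seconds" % (exc, delay))
            sleep(delay)
    return attempts


# Typical start-up, with a hypothetical broker address:
# run_with_retry(lambda: consume("rabbit-host.example"))
```

The `max_attempts` and `sleep` parameters are there only so that the retry loop can be exercised without a broker; a deployed service would leave them at their defaults and loop forever.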

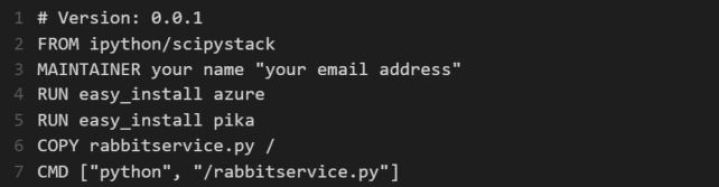

To create the Docker image we need a Dockerfile. We will use a very simple one.

Figure 5. Dockerfile for rabbit service.

Notice we have built the image on top of a rather extensive (but large) image, ipython/scipystack, that is available in the Docker registry. We also install the Azure Python tools and the Pika library. We will eventually need to have Mesosphere pull your image from the Docker registry, so you need to sign up with Docker.com so that you can push your Docker container image up there. Once you have the account there are two commands to run. The first builds the image and the second uploads it to the registry.

Figure 6. Dockerizing the services

The second command may take a while to complete because the scipystack image is rather large. You may be able to find a smaller base image, but I like the fact that so many good tools are included in scipystack.

The final step is executed from the dcos command line, but you must first create a JSON definition file. Go to the directory <yourpath>\dcos\sample, create a directory for the rabbitservice, and add this file as definition.json.

Figure 7. Definition.json file for rabbit service.
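Since the figure is missing here, the following Python sketch shows one way to generate a definition file of this kind. The field names are those of Marathon's app definition format, but the values are assumptions: the image name is hypothetical, the cpus and mem figures are only illustrative, and “HOST” networking is a guess based on the listener needing only outbound connections.

```python
import json


def rabbit_service_definition(image, instances=1):
    """Build a Marathon app definition for a Docker-packaged listener."""
    return {
        "id": "rabbitservice",
        "container": {
            "type": "DOCKER",
            # HOST networking is an assumption; the listener opens no ports.
            "docker": {"image": image, "network": "HOST"},
        },
        "cpus": 0.2,      # illustrative resource request
        "mem": 512.0,     # illustrative, in MB
        "instances": instances,
    }


# The image name below is a hypothetical placeholder.
definition = rabbit_service_definition("yourdockerid/rabbitservice")
text = json.dumps(definition, indent=2)
print(text)  # save this output as definition.json
```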

Then in that same directory execute the dcos command

$ dcos marathon app add definition.json

If you now go to the Mesosphere web page for your cluster, you can go to the services page and you should see Marathon running at the bottom. Clicking on Marathon should show you the microservices that Marathon is currently managing. Shown below is my cluster. I have two microservice types and each is running with 5 instances. Clicking on the name of your service will bring up details as shown below for my rabbitworker10 (a name change for rabbitservice … sorry for the confusion).

Figure 8. Mesosphere portal for Marathon service and “rabbitworker” microservice detail

If you have one instance running, you can click on the “Scale” button. Once you type in the number of instances you want, Mesosphere will deploy them on the available resources in a reasonably load-balanced way. It must be noted here that if an instance fails, Marathon and the other Mesosphere services will create a new instance automatically.
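Scaling can also be scripted rather than clicked. We did not do this in the experiment; the sketch below only assumes that Marathon's REST API accepts a PUT to /v2/apps/<app id> with a new instance count, and that Marathon is reachable at localhost:8080 when you are logged in to the master node.

```python
import json
import urllib.request


def scale_request(marathon_url, app_id, instances):
    """Build (but do not send) the PUT request that asks Marathon to scale an app."""
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url="%s/v2/apps/%s" % (marathon_url.rstrip("/"), app_id.strip("/")),
        data=body,
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


req = scale_request("http://localhost:8080", "rabbitservice", 5)
print(req.get_method(), req.full_url)
# urllib.request.urlopen(req) would send the request; run it on the master node.
```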

Creating a Simple REST Web Microservice

There are dozens of ways to program a REST service and picking the right one depends upon how you intend to use it. Consider the following simple scenario. Our application will have two types of microservices:

- One microservice “rabbitworker” processes events that are queued up in a message broker service. It then sends the processed events to a REST web service.

- The REST web service receives the events from the rabbitworker instances and does additional processing on them before putting the results in a database or table.

Figure 9. Single multi-threaded REST web service and two AMQP Rabbit listeners

The behavior of this application depends upon the arrival rate of the external events into the broker, the number of rabbitworker instances and the processing rate of each, and the rate at which the web service can do its work. If the arrival rate is small, a single rabbitworker and a single REST web service may be sufficient. If the rate is a bit higher, the system may need two rabbitworkers and the web service may need to be multithreaded to handle concurrent requests. If the web service is stateless, the best solution is to have an instance of it tied to each instance of the rabbitworker as shown below.
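As a rough back-of-the-envelope aid, the relationship between arrival rate and the number of workers can be sketched as follows. This is a steady-state throughput estimate only; it ignores queueing delay and bursts, and the rates in the example are hypothetical numbers, not measurements from our cluster.

```python
import math


def workers_needed(arrival_rate, per_worker_rate):
    """Smallest number of workers whose combined rate covers the arrival rate."""
    if per_worker_rate <= 0:
        raise ValueError("per_worker_rate must be positive")
    return max(1, math.ceil(arrival_rate / per_worker_rate))


# Hypothetical numbers: 45 events/s arriving, each rabbitworker handles 10 events/s.
print(workers_needed(45, 10))  # 5
```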

Figure 10. Kubernetes-style pods consisting of paired AMQP listener and REST web service

This solution is similar to the way pods are used in Kubernetes. A single pod could contain a single instance of rabbitworker and the web service. Another advantage of this solution is that the web service could be single-threaded because it need not support many concurrent clients. In the case we have demonstrated here, we used the Python package Bottle to build a simple single-threaded service as shown below.

Figure 11. Simple single-threaded REST web service
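The figure is not reproduced in this copy, so here is a minimal sketch of a Bottle service of this kind. It is not the original code: the /event route and the `handle_event` function are hypothetical names, and only the port number comes from the text. Bottle's default server is single-threaded, which matches the design described above.

```python
import json

PORT = 16666  # port number from the text


def handle_event(event):
    """Hypothetical processing step; a real service would store the result."""
    return {"status": "ok", "received": event}


def build_app():
    """Create the Bottle application with one POST route."""
    import bottle  # imported here so the module loads without Bottle installed

    app = bottle.Bottle()

    @app.post("/event")  # hypothetical route name
    def receive_event():
        raw = bottle.request.body.read()
        event = json.loads(raw.decode("utf-8"))
        return handle_event(event)  # Bottle serializes a returned dict as JSON

    return app


# To start the service inside the container:
# build_app().run(host="0.0.0.0", port=PORT)
```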

Notice that we have instructed the web service to come up on port 16666. To launch this microservice we need to create the Docker image and push it to the Docker registry as before. However, this time we must specify more detail in the definition file to handle the network binding. The new definition.json file is below.

Figure 12. definition.json file for web service specifying network and ports
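For readers without the figure, this Python sketch generates a definition of the shape described. The “BRIDGE” network, the port 16666, the five instances and the app name mywebservice come from the text. The image name is hypothetical, the cpus and mem values are illustrative, and mapping the container port to the same host port is an assumption.

```python
import json


def web_service_definition(image, port=16666, instances=5):
    """Build a Marathon app definition with bridge networking and a port mapping."""
    return {
        "id": "mywebservice",
        "container": {
            "type": "DOCKER",
            "docker": {
                "image": image,
                "network": "BRIDGE",
                "portMappings": [
                    # Same port inside and outside the container (assumption).
                    {"containerPort": port, "hostPort": port, "protocol": "tcp"}
                ],
            },
        },
        "cpus": 0.2,      # illustrative resource request
        "mem": 512.0,     # illustrative, in MB
        "instances": instances,
    }


# The image name below is a hypothetical placeholder.
web_definition = web_service_definition("yourdockerid/mywebservice")
print(json.dumps(web_definition, indent=2))  # save this output as definition.json
```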

We specified a “BRIDGE” network and port mappings. We launched five copies of the Bottle single-threaded version of the microservice. Because we specified a specific port binding, Marathon did not try to put more than one instance on each of our five worker servers. If we had launched only one instance, our rabbitworker microservice would not know which worker to find it on. However, Marathon knows, and we can have the rabbitworker issue a REST call to locate it. For example, from the master node we can submit such a request as follows

$ curl http://localhost:8080/v2/apps/mywebservice/tasks

The result is the IP address and port number of each node running the service.
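A rabbitworker could make the same lookup from Python. The sketch below assumes the response is JSON with a “tasks” list whose entries carry “host” and “ports” fields. The sample payload uses made-up addresses, and from a worker node the Marathon URL would be the master's address on the private network rather than localhost.

```python
import json
import urllib.request


def endpoints(tasks_payload):
    """Return (host, port) pairs from a Marathon /v2/apps/<id>/tasks response."""
    pairs = []
    for task in tasks_payload.get("tasks", []):
        for port in task.get("ports", []):
            pairs.append((task["host"], port))
    return pairs


def fetch_endpoints(marathon_url, app_id):
    """Query Marathon and return the endpoints of every running task."""
    request = urllib.request.Request(
        "%s/v2/apps/%s/tasks" % (marathon_url.rstrip("/"), app_id.strip("/")),
        headers={"Accept": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return endpoints(json.loads(response.read().decode("utf-8")))


# Illustrative response shape with made-up addresses:
sample = {"tasks": [{"host": "10.0.0.5", "ports": [16666]},
                    {"host": "10.0.0.6", "ports": [16666]}]}
print(endpoints(sample))
```

On the master node, `fetch_endpoints("http://localhost:8080", "mywebservice")` would perform the live query.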

A multi-threaded version of the web service can be built using Tornado as illustrated below

Figure 13. Multi-threaded web service based on Tornado
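The Tornado listing is also missing from this copy. The sketch below is one way to get a multi-threaded service with Tornado and is not necessarily how the original was written: Tornado itself runs a single-threaded event loop, so here the per-request work is handed to a thread pool. It assumes Tornado 5 or later; the /event route, the pool size and `handle_event` are hypothetical.

```python
import json
from concurrent.futures import ThreadPoolExecutor

PORT = 16666  # same port as the Bottle version
executor = ThreadPoolExecutor(max_workers=4)  # illustrative pool size


def handle_event(event):
    """Hypothetical processing step, run on a worker thread."""
    return {"status": "ok", "received": event}


def build_app():
    """Create the Tornado application with one POST route."""
    import tornado.ioloop  # imported here so the module loads without Tornado
    import tornado.web

    class EventHandler(tornado.web.RequestHandler):
        async def post(self):
            event = json.loads(self.request.body.decode("utf-8"))
            loop = tornado.ioloop.IOLoop.current()
            # Run the work on the pool so the event loop can accept other requests.
            result = await loop.run_in_executor(executor, handle_event, event)
            self.write(result)  # Tornado serializes a dict as JSON

    return tornado.web.Application([(r"/event", EventHandler)])


# To start the service inside the container:
# import tornado.ioloop
# build_app().listen(PORT)
# tornado.ioloop.IOLoop.current().start()
```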

We found both methods worked with five rabbitworker instances, but the version with five simple Bottle web services scaled better with high event arrival rates. A more quantitative analysis of this claim will appear here later.